Are Your Employees Opening Your Company Up to Liability?

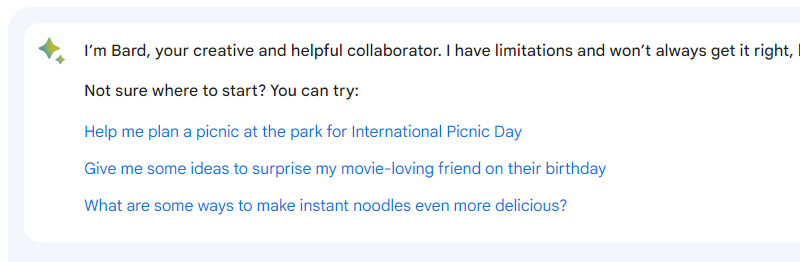

Alphabet Inc. (Google) seems to be caught in an unusual paradox. Outwardly, it is aggressively marketing Bard, its own generative AI chatbot. Inwardly, and quietly, it is warning its own employees about the dangers of AI chatbots. That is according to a Reuters report drawing on four independent sources, each in a position to know.


It seems ironic, if not downright contradictory, that Google, one of the largest creators and promoters of artificial intelligence in general and of generative AI chatbots in particular, finds it necessary to warn its own employees about using these tools on the company’s behalf, even as it pushes Bard in the marketplace as the next big thing for everyone else. So, are AI chatbots safe?

First, what is an AI chatbot?

A chatbot is a computer program that uses artificial intelligence (AI) and natural language processing (NLP) to understand customer questions and automate responses to them, simulating human conversation.

IBM Topics

Artificial Intelligence Has Been Around for Years, But Generative AI is New

For most consumers, artificial intelligence has been around for years, largely in the form of voice assistants such as Siri (Apple), Alexa (Amazon), and Google Assistant controlling basic system functions. Generative AI, however, took the concept to a whole new level. Since those early, simple iterations, laboratories in Silicon Valley and around the world had been working diligently behind the scenes to take AI further.

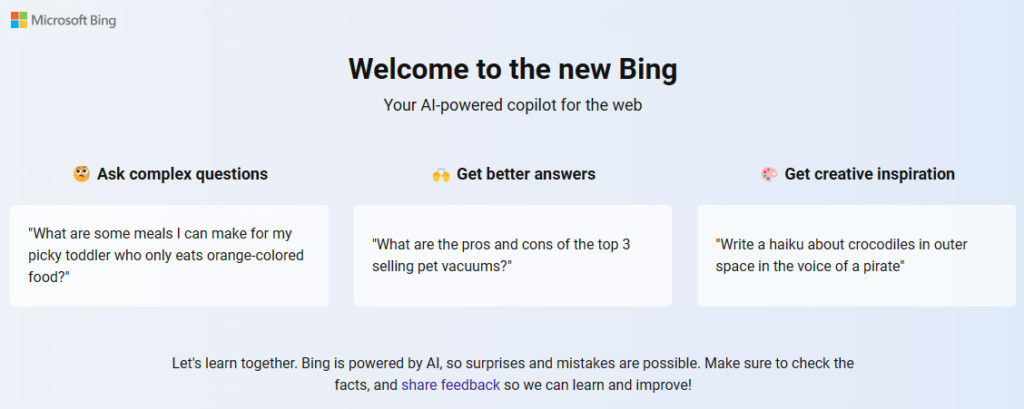

Then, this past February, Microsoft stunned everyone by announcing that it would enhance its Bing search engine, which has always lagged far behind Google Search, with AI-generated results, thanks to its investment in OpenAI, the company behind ChatGPT. ChatGPT is a generative AI chatbot trained on massive text datasets to recognize language patterns, which it then uses to generate new responses. The announcement shook the tech industry, and especially Google, to its foundations.

Microsoft: AI-Powered Bing is Your ‘AI Copilot’

Microsoft’s February announcement said, “Today, we’re launching an all new, AI-powered Bing search engine and Edge browser, available in preview now at Bing.com, to deliver better search, more complete answers, a new chat experience and the ability to generate content. We think of these tools as an AI copilot for the web.”

Google moved just as fast, announcing Bard, its answer to Microsoft’s ChatGPT-powered Bing, almost simultaneously: CEO Sundar Pichai’s blog post introducing it actually appeared a day before Microsoft’s launch event. In that post, Pichai wrote: “Bard seeks to combine the breadth of the world’s knowledge with the power, intelligence and creativity of our large language models. It draws on information from the web to provide fresh, high-quality responses. Bard can be an outlet for creativity, and a launchpad for curiosity, helping you to explain new discoveries from NASA’s James Webb Space Telescope to a 9-year-old, or learn more about the best strikers in football right now, and then get drills to build your skills.”

An AI Chatbot Horse Race

Now Microsoft and Google are in a horse race to see which can win greater adoption for its system. Keep in mind that search advertising generates well over $100 billion a year in revenue for Google. Microsoft, whose Bing search is a perennial also-ran to Google, has already begun eating into Google’s search market share, so Google has a great deal to lose.

So it is striking that, even as Google aggressively promotes its AI chatbot to more users and companies, it is warning its own employees behind the scenes about the dangers of this technology. The company appears to be downplaying those dangers to the people it wants to adopt Bard while raising red flags about them internally.

Are the Text and Images Generated by Generative AI Truly Unique?

Reuters found, for example, that Google has advised its employees not to enter confidential company material into AI chatbots, in order to safeguard that information. This may sound obvious, but keep in mind that early on it was widely believed that chatbots generated unique responses by transforming the language patterns they had learned from scanning massive datasets. It is now understood that they can occasionally reproduce source material from their training data. If a chatbot learns from what users type, confidential material entered today could, in principle, resurface in someone else’s answer later.

Alphabet has also instructed its engineers, the report says, “…to avoid direct use of computer code that chatbots can generate.” Generating computer code is one of the uses of generative AI that many people have embraced, yet when asked about it, Google told Reuters that “Bard can make undesired code suggestions, but it helps programmers nonetheless.”

Generative AI Generates the Need for New Company Security Standards

According to Reuters’ sources, Google is concerned about potential, if unintended, business harm from Bard, which the company rushed to market to compete with Microsoft. Google’s caution also reflects what is becoming a new security standard for corporations, “namely to warn personnel about using publicly-available chat programs.”

On this point, Google is hardly alone. Reuters surveyed a range of large corporations and found that Samsung, Amazon, Deutsche Bank, and even Apple have all reportedly begun instituting new security standards for the use of generative AI.

Courts Instituting New Rules Regarding the Use of Generative AI in Filings

Courts around the country are also beginning to issue rules on the use of generative AI in court filings. This rash of new rules was prompted by a well-publicized case in which an attorney used generative AI to find supporting case citations for a legal brief. The brief was well written, but it had one very serious problem: the six cases it cited as precedent for its legal arguments were all made-up, fake, nonexistent cases.

The lawyer suddenly found himself summoned to a special court hearing to explain himself, after attorneys for the opposing side notified the judge that the citations were bogus. The judge weighed serious sanctions against the attorney, who told the court he was “unaware that its content could be false,” according to a report by the BBC.

As a result of this fiasco, an increasing number of courts are instituting new rules requiring lawyers to disclose if, and where, generative AI was used in the preparation of a brief.

This points to one of the main problems with generative AI: it often lies, making up things that never existed (a failure commonly called “hallucination”). It is a flaw that neither Microsoft’s ChatGPT-driven Bing nor Google’s Bard (launched on LaMDA and since moved to PaLM 2) has yet completely solved. The companies have limited their own legal liability by adding disclaimers, which means users rely on these systems at their own risk.

Survey Shows 43% of Biz Pros Using Generative AI, Some Without Company’s Knowledge

A survey of U.S. business professionals conducted in January found that 43% of them were using ChatGPT or similar AI tools, often without telling their employers. How many of these users understand that the information they enter in their prompts may become part of the chatbot’s training data and could potentially be exposed to others? How many of them understand that while answers to their prompts come quickly, they may include misinformation, sensitive data, or even copyrighted passages from protected documents or images?

Yusuf Mehdi, Microsoft’s consumer marketing chief, told Reuters it “makes sense” that companies would not want their staff to use public chatbots for work. “Companies are taking a duly conservative standpoint,” he said.

Corporate Versions of Chatbots Reportedly Will NOT Feed Your Data into Public Models

Both Microsoft and Google are reportedly working on higher-priced corporate versions of their AI chatbots that would not absorb entered data into the public AI models, which would help address that issue. In the meantime, be aware that by default the publicly available AI chatbots save users’ conversation history and may feed that input into the public model.

Users can opt to delete their conversation history, if they remember to do so.

Oh, and by the way: remember our hapless lawyer friend, called before the judge to explain his bogus case citations? He submitted a printout of his ChatGPT session in which he asked the chatbot to verify that one of its citations was in fact real (showing he suspected a problem). ChatGPT replied more than once that the case was real, saying it could be found on LexisNexis and Westlaw. After “double checking,” it again told him the citation was a real case, lying to him over and over again. A convincing lie, I’d say.

Does Generative AI Really Save You Time?

The big advantage of generative AI is how quickly it produces interesting material. However, the time it saves on the front end may well be offset by the time spent confirming that what it gave you is factual, accurate, and not a violation of anyone’s copyright.

Or…you could just research a subject and write about it yourself…


